Disclaimer: This article was not written using AI!

Billions of voice-interface-enabled devices have shipped in the last 5 years, and voice assistants are used by millions of consumers across the world. Assistants such as Alexa, Google Assistant and Siri are household names, but is interest starting to subside? And if so, how might the industry reignite it?

The voice assistant market explodes.

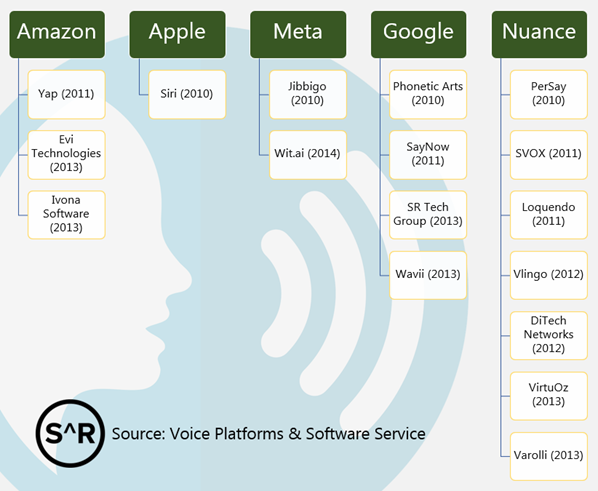

In the early 2010s, five vendors started to gobble up voice interface technology companies. These tech behemoths saw the potential of voice assistants and invested heavily to make sure that they would not be left behind.

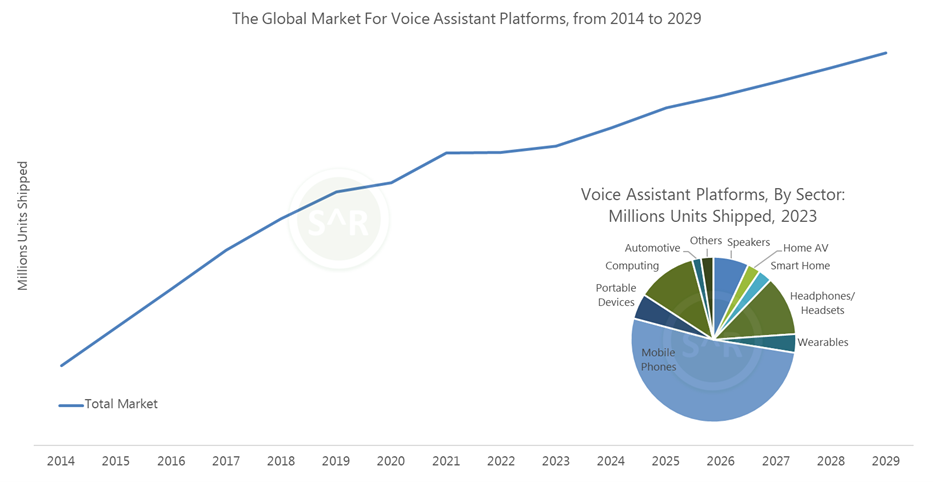

SAR Insight published its first report on the market back in 2016, titled “Always On / Always Listening, Sound Enhancement & Voice Interface Technologies”. In that report we stated, “while there is a long way to go before touch is usurped by voice as the main interface, it seems inevitable that this will happen in the next 5-10 years as technologies advance”. Over the last 7 years voice technology has become well established across several markets, perhaps most obviously in the home, where the smart speaker market went from almost zero in 2014 to over 100 million units per annum by 2019. We now believe that the smart speaker market has reached its peak, and one major contributing factor is waning consumer interest in voice, which has not delivered the value that was expected.

The plateau is reached.

In the 2016 report we also stated “Today these platforms are siloed, with each platform vendor pursuing its own course and trying to outdo the competition. It seems likely that this will continue for many years to come but longer-term standards are expected to be devised to enable more cross platform interaction and new cross platform assistants are likely to come to market”. When the report was written, we (and many in the industry) felt that interoperability was a few years away. Unfortunately, this has not happened as soon as we hoped.

The lack of interoperability is one of the main reasons why voice has not lived up to its promise. Most assistant platforms are geared towards generating revenue in different ways, such as selling products or enabling search, and this has entrenched a walled garden approach. It is understandable that companies which acquired others and spent billions on development want to generate revenue from their efforts, but you could argue that this has not been successful, and that by working together the whole industry would be much more advanced and profitable by now. Maybe we would already have the conversational AI assistant that consumers were hoping for, one that matches the world imagined by films such as “Her”.

Security has been an overarching concern since the outset of smart home technology offerings. In addition to privacy concerns over video feeds from doorbells and security cameras, voice has also been a talking point. Processing has tended to be conducted off-device in the cloud, leaving it vulnerable to external threats. There have been several attempts to move to more local processing; however, most current voice assistant platforms are designed to process in the cloud, which enables data to be used and sold. So, as with many things, the commercial need is at loggerheads with the wishes of consumers.

Although voice applications are used in most markets, assistants have been slow to recognise regional dialects, and it is only in the last few years that Alexa has been trained to understand these. The lack of accuracy can be troublesome, particularly if you are trying to provide important instructions, e.g., when operating a satnav.

Voice pickup in noisy environments has always been a challenge, and there has been a constant focus on addressing it, with emphasis on microphone type, microphone placement and machine learning techniques to try to solve far-field pickup.

Where do we grow from here?

The basic use of voice to change or pause a song is more than enough functionality for most. Commands such as checking the weather or searching for recipes have lost their appeal over time, which is why the market needs a disrupter. AI is becoming ever more prevalent, particularly since the emergence of platforms such as ChatGPT.

AI can be used in several ways to help enhance the voice user experience. Voice synthesis can become extremely detailed and realistic with the incorporation of neural networks and deep learning. When humans speak, they vary their expression, intonation and volume. By learning from this type of data, neural networks can produce voices which have more natural, humanised qualities.

Contextual awareness is a critical ability which enables smart devices to learn information about the user and environment. Data combined from multiple sensors can then be utilised to make a device’s response to the user more helpful. When the device’s voice responses are pertinent to the user’s location or activity, it can become a part of the daily routine, and its behaviour can grow more sophisticated as it learns continuously from everyday use.

We are entering the age of headphone 3.0, often referred to as “ear-worn computing”, utilising edge AI processing. This is a perfect use case for the next generation of voice assistants, which will use generative AI alongside more traditional voice algorithms to create the ultimate in-ear companion. We comment on the emergence of headphone 3.0 in an article published in EE Times.

Although each end-market can use AI in diverse ways, wherever a virtual voice is present it will feature realistic-sounding interactions. Some voice assistants have remained unchanged in how they sound for several years, lacking personality and human-like tonality. Movies such as Marvel’s Iron Man portray voice assistants which are indistinguishable from a real person’s voice. This is the road we will be travelling down; however, there will be some scepticism from users who believe these voice assistants are becoming far too human-like.

The future success of voice assistants will be driven by their integration with generative AI platforms such as ChatGPT. Chat AI platforms can offer multiple modalities of interaction, such as text, voice, image, and video, so users can choose the mode most suitable for their situation and purpose. We believe the next generation of personal AI assistants will use voice as the main way to interact with their human “master”, as this is the most natural form of communication. This will then be augmented by other modalities as needed.

Chat AI should not be seen as a replacement or competition for human communication, but rather as a complement and enhancement that can enrich and empower our conversations.

SAR forecasts a 5% CAGR for the total voice assistant platforms market. When we analyse the data at a more granular level, we see markets within this sector, such as headphones & headsets, smart home and automotive, all with CAGRs surpassing 10%. This is indicative of the use of AI in these devices to help boost user convenience.
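
To give a feel for what the gap between a 5% and a 10% CAGR means in practice, here is a minimal sketch of the standard compound growth arithmetic. The base index of 100 and the five-year horizon are illustrative assumptions only; they are not SAR figures, and the forecast period is not stated here.

```python
def project(base: float, cagr: float, years: int) -> float:
    """Value reached after compounding `base` at rate `cagr` for `years` years."""
    return base * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    return (end / start) ** (1.0 / years) - 1.0


# Hypothetical inputs for illustration only (not taken from any forecast).
BASE_INDEX = 100.0   # both markets indexed to 100 in the starting year
HORIZON_YEARS = 5    # assumed horizon

total_market = project(BASE_INDEX, 0.05, HORIZON_YEARS)  # 5% CAGR
fast_segment = project(BASE_INDEX, 0.10, HORIZON_YEARS)  # 10% CAGR

print(f"Total market index after {HORIZON_YEARS} years: {total_market:.1f}")
print(f"Fast segment index after {HORIZON_YEARS} years: {fast_segment:.1f}")
```

Under these assumptions, a segment compounding at 10% grows by roughly 61% over five years, against roughly 28% for the total market at 5%, which is why the faster segments stand out in the granular data.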